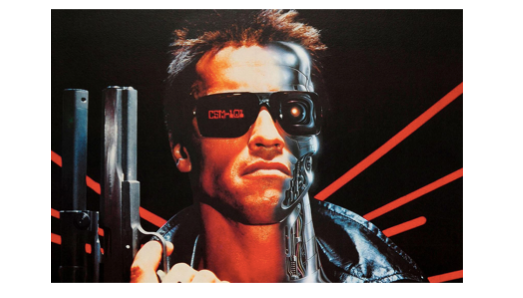

“I’ll Be Back…” AI’s Next & More Dangerous Act?

By Ayman Haydar, CEO MMP WorldWide

I read an article recently that said current-generation AIs are sociopaths. It’s a far cry from the ‘light-hearted’ ChatGPT discussion dominating feeds right now. As talking points go, it’s probably more interesting as well.

We hear a lot about how AI can support, improve, or simplify human tasks, with an undercurrent of caution not to let it become ‘too intelligent’. That’s what we’re dealing with: trying to advance our capabilities with automation but still retain full control, unless of course we want to go the way of the movies and end up in a war against the machines. Got to love Spielberg.

Not so long ago I asked the question: ‘Who would want to be a human today?’ For context, it was examining the role of technology in comparison to our own intelligence, skill and emotions. Surely, man will always fail when pitted against a faster, cleverer machine? It was at a time when people were really fearing the impact of the fourth industrial revolution.

Back in 2019, technology was out to steal our jobs and mutinous robots were about to rebel against their masters. 75 million jobs were about to be displaced in two years, creating further division and mistrust. Fast forward one global pandemic and one economic meltdown, and the argument looks a little different.

We’re still caught up on the best use for technology, especially as the huge digital spike in ecommerce we witnessed during the pandemic starts to slow and we try to find a new baseline. The huge tech layoffs happening right now are testament to that.

To be clear though, this isn’t the work of AI promising a faster, more cost-effective service than its human counterpart. No, there are a lot of factors disrupting the tech landscape right now, from a sluggish economy to changing consumer trends. Decision-makers are left facing the consequences. Calculated human error, pure and simple.

This brings me to the wider point here: humans, by nature, are flawed, which means that to an extent the technology we program and control is too. Princeton neuroscientist Michael Graziano’s argument in his essay is that without consciousness, AI-powered chatbots are doomed to be dangerous sociopaths, which is a chilling thought.

In their current iteration, systems like ChatGPT can imitate the human mind to an impressive extent, leaning into the more creative and fun side rather than anything sinister at this stage. You want it to write a rap on the future of meat? No problem. An advert delivered in the style of Ryan Reynolds? Sure.

ChatGPT can be savage, it can be funny, it can be direct, and it can be clever. It can be anything you ask it to be. And that’s an issue. It can act without thought. Be coerced. Be manipulated. Give wrong answers with unwavering confidence. In this sense, you can see where Graziano is coming from in his argument in favor of giving AI more agency to be more empathetic and conscious of its actions.

It’s usually around now that someone brings up a Terminator reference, but let’s be honest, this view of a future world dominated by ‘human’ cyborgs feels more comical than threatening in 2023. I think if anything, anthology shows like Black Mirror paint a more realistic portrayal of ‘good tech gone bad’ because they magnify our reliance on technology to a frightening degree… your worth determined by a social rating system, anyone?

Still, the truth is algorithms run much of our lives today. Over the last few years, responsible AI has gone from something discussed here and there to constantly being in the spotlight. We’re always finding new ways to evolve machine learning to improve efficiencies, enhance creativity and automate processes to save time.

However, as we rely more on AI, there need to be real discussions on machine consciousness. For now, these products and systems have limited power and uses (depending on their function), but naturally as the technology evolves in line with our own needs and expectations, it’s likely we’ll start asking these systems to make more consequential decisions. Just like we have brand safety in digital advertising, there should be a limit to what AI can be used for.

It’s hard to navigate the idea of regulating and keeping AI in check, especially as the consumer narrative has focused more on the ‘enjoyable’ side of these products. Social platforms Meta and Twitter are reportedly working on their own generative AI, while Snapchat recently rolled out ‘My AI’ – a chatbot that can write a haiku about cheese, among other things. Sounds terrifying.

I’ve always believed technology enables greater creativity and possibilities, but only when guided by the human element. I still think this; however, I’m more cautious now about how and why we program this technology as we do. It’s easy to forget you are talking to a computer with an endless memory and no off button.

Snap’s AI is positioned more as a persona than as a tool for productivity, which could be problematic when you consider the platform’s young audience, who might be more inclined to treat it like a friend.

At the end of the day, as much as computers ‘seek’ a life outside of the screen, they are not and will never be human. It’s impossible. So where does that leave us? Some halfway house where we encourage their abilities only so far, or do we continue down this path to a more conscious machine race?

Arnold Schwarzenegger famously made movie history with his ‘I’ll be back’ line. It’s hard to imagine a chatbot having the same impact. Maybe humans are still needed for something after all.